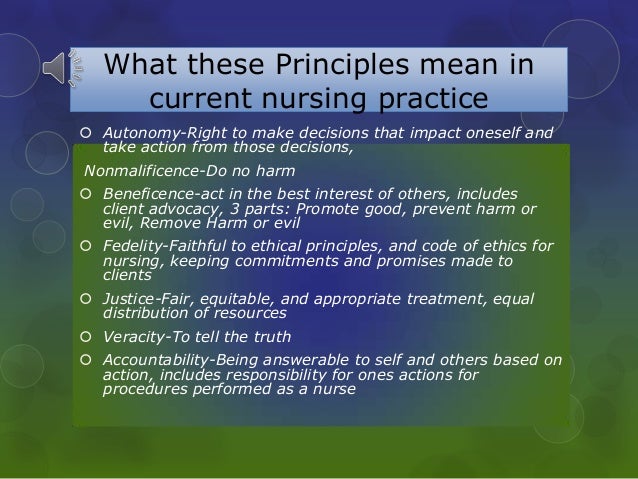

Discussion has focused mostly on how developers, manufacturers, authorities, and other stakeholders should minimize the ethical risks (discrimination, threats to privacy, physical and social harms) that can arise from AI applications. The principle of non-maleficence is reflected in the Hippocratic oath in the words “First, do no harm” (Summers, 2009). Generally, AI ethics has been primarily concerned with the principle of non-maleficence. Beneficence encourages the creation of beneficial AI (“AI should be developed for the common good and the benefit of humanity”), while non-maleficence concerns the negative consequences and risks of AI. Although these two principles may look similar, they are distinct: the principle of beneficence says “do good”, while the principle of non-maleficence says “do no harm”. Often explained as “above all, do no harm”, non-maleficence is considered by some to be the most critical of all the principles, even though in theory they all carry equal weight (Kitchener, 1984; Rosenbaum, 1982; Stadler, 1986).

Non-maleficence is the concept of not causing harm to others. The principle of non-maleficence implies an obligation to guarantee patient safety, whereas the principle of beneficence implies an obligation for health care networks to guarantee continuity of care in all its dimensions. Finally, the principle of autonomy translates into a specific obligation to promote and respect patient choice.

“AI inevitably becomes entangled in the ethical and political dimensions of vocations and practices in which […] AI Ethics is effectively a microcosm of the political and ethical challenges faced in society.”
